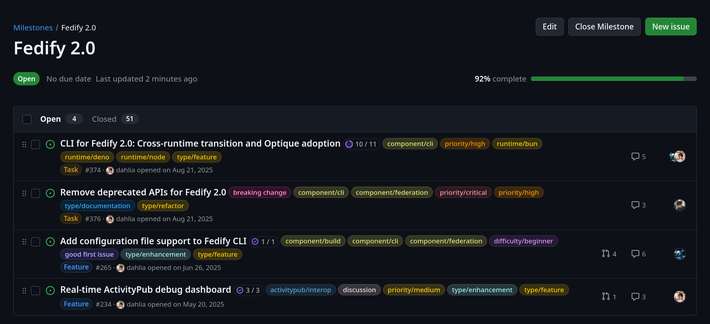

"Fedify 2.0.0 está aqui!

Esta é a maior atualização da história do Fedify. Destaques:

**Arquitetura modular** – O pacote monolítico `@fedify/fedify` foi dividido em pacotes independentes e focados: `@fedify/vocab`, `@fedify/vocab-runtime`, `@fedify/vocab-tools`, `@fedify/webfinger` e outros. Pacotes menores, imports mais limpos e a possibilidade de estender o ActivityPub com tipos de vocabulário personalizados.

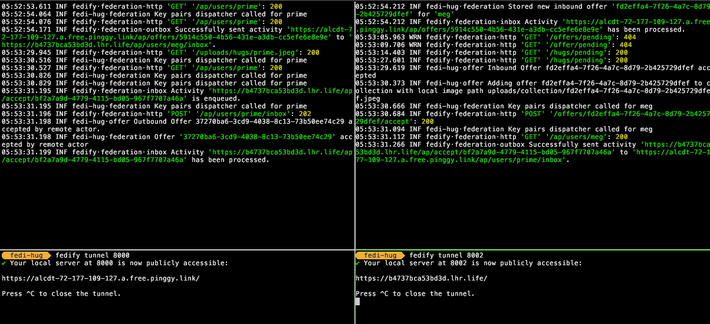

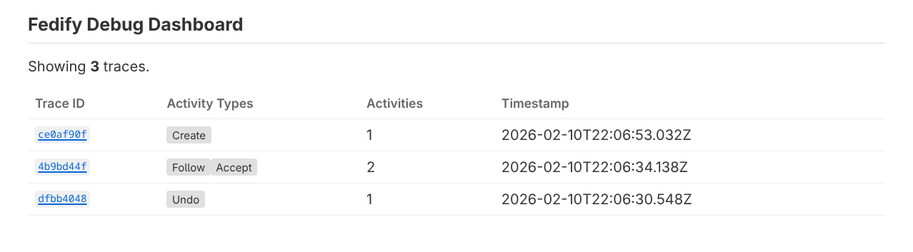

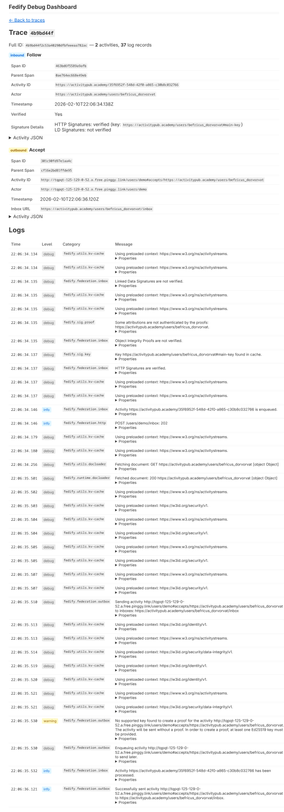

**Painel de depuração em tempo real** – O novo pacote `@fedify/debugger` oferece um dashboard ao vivo em `/__debug__/` que mostra todo o tráfego de federação: traces, detalhes das atividades, verificação de assinaturas e logs correlacionados. Basta envolver seu objeto `Federation` e pronto.

**Suporte a relay do ActivityPub** – Suporte nativo a relays via `@fedify/relay` e o comando CLI `fedify relay`. Compatível com os protocolos Mastodon-style e LitePub-style (FEP-ae0c).

**Entrega ordenada de mensagens** – A nova opção `orderingKey` resolve o problema do "post zumbi", quando um `Delete` chega antes do seu `Create`. Atividades com a mesma chave são entregues garantidamente na ordem FIFO.

**Tratamento de falhas permanentes** – `setOutboxPermanentFailureHandler()` permite reagir quando uma inbox remota retorna 404 ou 410, possibilitando limpar seguidores inacessíveis em vez de tentar reenviar indefinidamente.

Outras novidades incluem negociação de conteúdo no nível do middleware, `@fedify/lint` para regras compartilhadas de linting, `@fedify/create` para scaffolding rápido de projetos, arquivos de configuração para a CLI, suporte nativo à CLI em Node.js/Bun e diversos fixes de bugs.

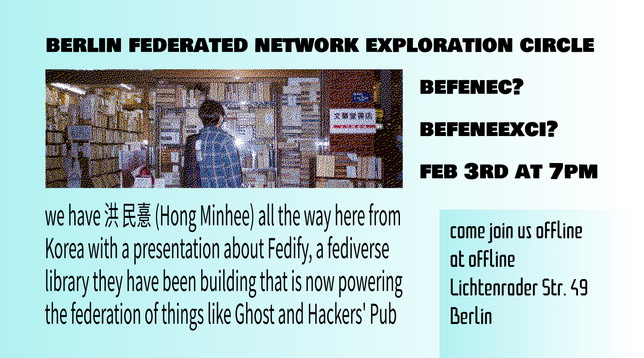

Esta versão conta com contribuições significativas de participantes do OSSCA da Coreia. Agradecemos imensamente a todos envolvidos!

Trata-se de uma release major com breaking changes. Consulte o guia de migração antes de atualizar.

Notas completas da release: https://github.com/fedify-dev/fedify/discussions/580

#Fedify #ActivityPub #fediverso #fedidev #TypeScript"

@fediverse @tecnologia @academicos @internet (pode seguir para acompanhar os assuntos ou marcar para amplificar a postagem até no #lemmy tb)

@fedify https://hollo.social/@fedify/019c8521-92ef-7d5f-be4d-c50eae575742