Fedify 1.9.0: Security enhancements, improved DX, and expanded framework support

We are excited to announce Fedify 1.9.0, a mega release that brings major security enhancements, improved developer experience, and expanded framework support. Released on October 14, 2025, this version represents months of collaborative effort, particularly from the participants of Korea's OSSCA (Open Source Contribution Academy).

This release would not have been possible without the dedicated contributions from OSSCA participants: Jiwon Kwon (@z9mb1), Hyeonseo Kim (@gaebalgom), Chanhaeng Lee (@2chanhaeng), Hyunchae Kim (@r4bb1t), and An Subin (@nyeong). Their collective efforts have significantly enhanced Fedify's capabilities and made it more robust for the fediverse community.

Origin-based security model

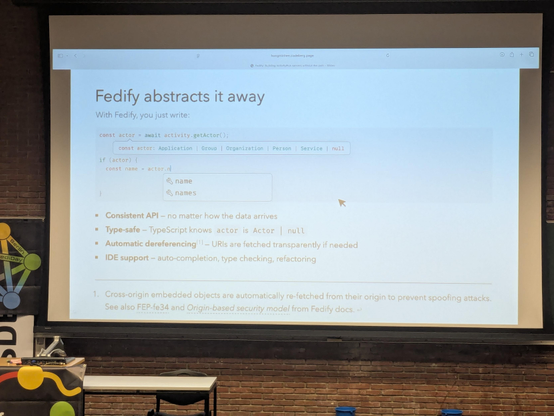

Fedify 1.9.0 implements FEP-fe34, an origin-based security model that protects against content spoofing attacks and ensures secure federation practices. This critical security enhancement enforces same-origin policy for ActivityPub objects and their properties, preventing malicious actors from impersonating content from other servers.

The security model introduces a crossOrigin option in Activity Vocabulary property accessors (get*() methods) with three security levels:

// Default behavior: logs warning and returns null for cross-origin content

const actor = await activity.getActor({ crossOrigin: "ignore" });

// Strict mode: throws error for cross-origin content

const object = await activity.getObject({ crossOrigin: "throw" });

// Trust mode: bypasses security checks (use with caution)

const attachment = await note.getAttachment({ crossOrigin: "trust" });

Embedded objects are automatically validated against their parent object's origin. When an embedded object has a different origin, Fedify performs automatic remote fetches to ensure content integrity. This transparent security layer protects your application without requiring significant code changes.

For more details about the security model and its implications, see the origin-based security model documentation.

Enhanced activity idempotency

Activity idempotency handling has been significantly improved with the new withIdempotency() method. This addresses a critical issue where activities with the same ID sent to different inboxes were incorrectly deduplicated globally instead of per-inbox.

federation

.setInboxListeners("/inbox/{identifier}", "/inbox")

.withIdempotency("per-inbox") // New idempotency strategy

.on(Follow, async (ctx, follow) => {

// Each inbox processes activities independently

});

The available strategies are:

"per-origin": Current default for backward compatibility"per-inbox": Recommended strategy (will become default in Fedify 2.0)- Custom strategy function for advanced use cases

This enhancement ensures that shared inbox implementations work correctly while preventing duplicate processing within individual inboxes. For more information, see the activity idempotency documentation.

Relative URL resolution

Fedify now intelligently handles ActivityPub objects containing relative URLs, automatically resolving them by inferring the base URL from the object's @id or document URL. This improvement significantly enhances interoperability with ActivityPub servers that use relative URLs in properties like icon.url and image.url.

// Previously required manual baseUrl specification

const actor = await Actor.fromJsonLd(jsonLd, { baseUrl: new URL("https://example.com") });

// Now automatically infers base URL from object's @id

const actor = await Actor.fromJsonLd(jsonLd);

This change, contributed by Jiwon Kwon (@z9mb1), eliminates a common source of federation failures when encountering relative URLs from other servers.

Full RFC 6570 URI template support

TypeScript support now covers all RFC 6570 URI Template expression types in dispatcher path parameters. While the runtime already supported these expressions, TypeScript types previously only recognized simple string expansion.

// Now fully supported in TypeScript

federation.setActorDispatcher("/{+identifier}", async (ctx, identifier) => {

// Reserved string expansion — recommended for URI identifiers

});

The complete set of supported expression types includes:

{identifier}: Simple string expansion{+identifier}: Reserved string expansion (recommended for URIs){#identifier}: Fragment expansion{.identifier}: Label expansion{/identifier}: Path segments{;identifier}: Path-style parameters{?identifier}: Query component{&identifier}: Query continuation

This was contributed by Jiwon Kwon (@z9mb1). For comprehensive information about URI templates, see the URI template documentation.

WebFinger customization

Fedify now supports customizing WebFinger responses through the new setWebFingerLinksDispatcher() method, addressing a long-standing community request:

federation.setWebFingerLinksDispatcher(async (ctx, actor) => {

return [

{

rel: "http://webfinger.net/rel/profile-page",

type: "text/html",

href: actor.url?.href,

},

{

rel: "http://ostatus.org/schema/1.0/subscribe",

template: "https://example.com/follow?uri={uri}",

},

];

});

This feature was contributed by Hyeonseo Kim (@gaebalgom), and enables applications to add custom links to WebFinger responses, improving compatibility with various fediverse implementations. Learn more in the WebFinger customization documentation.

New integration packages

Fastify support

Fedify now officially supports Fastify through the new @fedify/fastify package:

import Fastify from "fastify";

import { fedifyPlugin } from "@fedify/fastify";

const fastify = Fastify({ logger: true });

await fastify.register(fedifyPlugin, {

federation,

contextDataFactory: () => ({ /* your context data */ }),

});

This integration was contributed by An Subin (@nyeong). It supports both ESM and CommonJS, making it accessible to all Node.js projects. See the Fastify integration guide for details.

Koa support

Koa applications can now integrate Fedify through the @fedify/koa package:

import Koa from "koa";

import { createMiddleware } from "@fedify/koa";

const app = new Koa();

app.use(createMiddleware(federation, (ctx) => ({

user: ctx.state.user,

// Pass Koa context data to Fedify

})));

The integration supports both Koa v2.x and v3.x. Learn more in the Koa integration documentation.

Next.js integration

The new @fedify/next package brings first-class Next.js support to Fedify:

// app/api/ap/[...path]/route.ts

import { federation } from "@/federation";

import { fedifyHandler } from "@fedify/next";

export const { GET, POST } = fedifyHandler(federation);

This integration was contributed by Chanhaeng Lee (@2chanhaeng). It works seamlessly with Next.js App Router. Check out the Next.js integration guide for complete setup instructions.

CommonJS support

All npm packages now support both ESM and CommonJS module formats, resolving compatibility issues with various Node.js applications and eliminating the need for the experimental --experimental-require-module flag. This particularly benefits NestJS users and other CommonJS-based applications.

FEP-5711 collection inverse properties

Fedify now implements FEP-5711, adding inverse properties to collections that provide essential context about collection ownership:

const collection = new Collection({

likesOf: note, // This collection contains likes of this note

followersOf: actor, // This collection contains followers of this actor

// … and more inverse properties

});

This feature was contributed by Jiwon Kwon (@z9mb1). The complete set of inverse properties includes likesOf, sharesOf, repliesOf, inboxOf, outboxOf, followersOf, followingOf, and likedOf. These properties improve data consistency and enable better interoperability across the fediverse.

CLI enhancements

NodeInfo visualization

The new fedify nodeinfo command provides a visual way to explore NodeInfo data from fediverse instances. This replaces the deprecated fedify node command and offers improved parsing of non-semantic version strings. Try it with:

fedify nodeinfo https://comam.es/snac/

This was contributed by Hyeonseo Kim (@gaebalgom). The command now correctly handles various version formats and provides a cleaner visualization of instance capabilities. See the CLI documentation for more options.

Enhanced lookup with timeout

The fedify lookup command now supports a timeout option to prevent hanging on slow or unresponsive servers:

fedify lookup --timeout 10 https://example.com/users/alice

This enhancement, contributed by Hyunchae Kim (@r4bb1t), ensures reliable operation even when dealing with problematic remote servers.

Package modularization

Several modules have been separated into dedicated packages to improve modularity and reduce bundle sizes. While the old import paths remain for backward compatibility, we recommend migrating to the new packages:

@fedify/cfworkers replaces @fedify/fedify/x/cfworkers@fedify/denokv replaces @fedify/fedify/x/denokv@fedify/hono replaces @fedify/fedify/x/hono@fedify/sveltekit replaces @fedify/fedify/x/sveltekit

This modularization was contributed by Chanhaeng Lee (@2chanhaeng). The old import paths are deprecated and will be removed in version 2.0.0.

Acknowledgments

This release represents an extraordinary collaborative effort, particularly from the OSSCA participants who contributed numerous features and improvements. Their dedication and hard work have made Fedify 1.9.0 the most significant release to date.

Special thanks to all contributors who helped shape this release, including those who provided feedback, reported issues, and tested pre-release versions. The fediverse community's support continues to drive Fedify's evolution.

For the complete list of changes, bug fixes, and improvements, please refer to the CHANGES.md file in the repository.

@技術・雑談

@技術・雑談