Frank Goossens' blog

@frank@blog.futtta.be

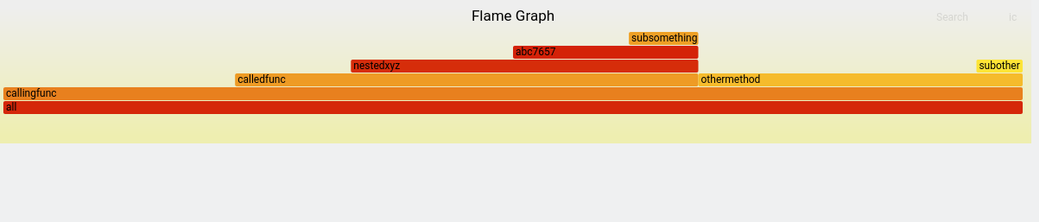

Being the web performance zealot I am, I strive to having as little JavaScript on my sites as possible. JavaScript after all has to be downloaded and has to be executed, so extra JS will always have a performance impact even when in the best of circumstances it exceptionally does not impact Core Web Vitals (which are a snapshot of the bigger performance and sustainability picture). Hence when adding blocks in WordPress, I check if the block is entirely rendered server-side and if not I look […]

Being the web performance zealot I am, I strive to having as little JavaScript on my sites as possible. JavaScript after all has to be downloaded and has to be executed, so extra JS will always have a performance impact even when in the best of circumstances it exceptionally does not impact Core Web Vitals (which are a snapshot of the bigger performance and sustainability picture). Hence when adding blocks in WordPress, I check if the block is entirely rendered server-side and if not I look for alternatives to avoid multiple files from wp-includes/js/dist (and in the case of some 3rd party blocks the entire React JS and more) being loaded.

For that reason I tested the WordPress ActivityPub plugin with the reactions block loaded as per these guidelines and indeed it triggers the loading of hooks.min.js, i18n.min.js, url.min.js, api-fetch.min.js (all in wp-includes/js/dist) and 2 files from the plugin itself (/wp-content/plugins/activitypub/build/reactions/view.js and /wp-content/plugins/activitypub/build/remote-reply/view.js).

To be able to reduce the dependency on those JavaScript files, 2 questions needed to be answered; how to have reactions (which I like a lot) without the JavaScript-driven rendering and what is that remote-reply thing.

To be able to reduce the dependency on those JavaScript files, 2 questions needed to be answered; how to have reactions (which I like a lot) without the JavaScript-driven rendering and what is that remote-reply thing.

Starting with the latter; “remote-reply” handles the federation of local comments on reactions (comments) from the Fediverse, showing a modal window where the commenter is asked what ActivityPub account they want to post the reaction from. I decided this was not that important for me and –with some help from Matthias @pfefferle who always gives great support- came up with a couple of lines of code to not “do” remote-reply on this blog.

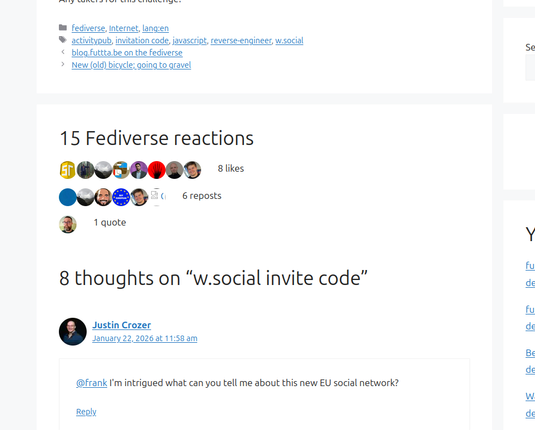

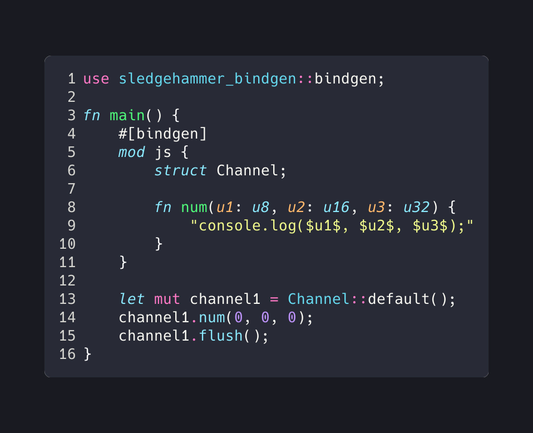

Now that Fediverse reactions block is very nice and I did want reactions showing on my blog, so I started looking at the database and the ActivityPub plugin code and saw that all Fediverse reactions were stored in the wp-comment en wp-comment-meta db-tables and were in fact accessible with the WP_Comment_Query class and with quite a bit of trial and error I ultimately ended up with a totally server-side generated solution that looked pretty nice (and similar to the JavaScript-rendered one).

If you’re interested, you can find the code in this gist, but don’t expect it to be good. Some negatives include no language handling, unminified CSS inline and the placement of the reactions might not work on every theme as I hook into the comments_template action to try to show them just before the comments. But who knows it might just work for you as well?

Qiita - 人気の記事

Qiita - 人気の記事