APB Boo (Spooky Version)

@APBBlue@thepit.social

@fabio@cosocial.ca

#Claude is down this morning. Today we’ll find out who’s still a software engineer lol

@rolle@mementomori.social

Anthropic's models have been down most of Monday. I wonder if that has anything to do with the recent events.

https://www.theguardian.com/technology/2026/feb/28/openai-us-military-anthropic

@jbz@indieweb.social

🥸 Pentagon used Anthropic's Claude during Maduro raid

https://www.axios.com/2026/02/13/anthropic-claude-maduro-raid-pentagon

@petersuber@fediscience.org · Reply to petersuber's post

Update. Employees of #Google and #OpenAi just released an open letter supporting #Anthropic.

https://notdivided.org/

"We hope our leaders will put aside their differences and stand together to continue to refuse the Department of War's current demands for permission to use our models for domestic mass surveillance and autonomously killing people without human oversight."

The letter welcomes new signatures from past and present employees of Google and OpenAI.

At the time of this post, it had 684 signatures.

@gnrz@theforkiverse.com

When can we have Claude in a teddy?

@petersuber@fediscience.org · Reply to petersuber's post

Update. "#SamAltman says #OpenAI shares #Anthropic's red lines in #Pentagon fight."

https://archive.is/5sTBa

@petersuber@fediscience.org · Reply to petersuber's post

Update. #Anthropic just 𝗿𝗲𝗷𝗲𝗰𝘁𝗲𝗱 #Pentagon demands to remove safeguards on #Claude that limit its use in mass surveillance and autonomous weapons. Here's the statement from CEO #DarioAmodei.

https://www.anthropic.com/news/statement-department-of-war

@donelias@mastodon.cr

A cybercriminal manipulates the Claude chatbot to infiltrate Mexican government agencies

An attacker used AI to steal 150 GB of data from the Mexican government, exposing how automation empowers both cybercrime and digital defenses.

@petersuber@fediscience.org

Ugh. "Anthropic Drops Flagship Safety Pledge."

https://time.com/7380854/exclusive-anthropic-drops-flagship-safety-pledge/

It's not yet clear what this means for the high-stakes negotiation between Anthropic and the Pentagon. Two of the Anthropic sticking points have been that Claude not be used for "mass surveillance or autonomous weapons systems that can use AI to kill people without human input."

https://www.theguardian.com/us-news/2026/feb/24/anthropic-claude-military-ai

#AI #Anthropic #Claude #Hegseth #LLMs #Pentagon #USPol #USPolitics

@meltforce@theforkiverse.com

@MissConstrue@mefi.social

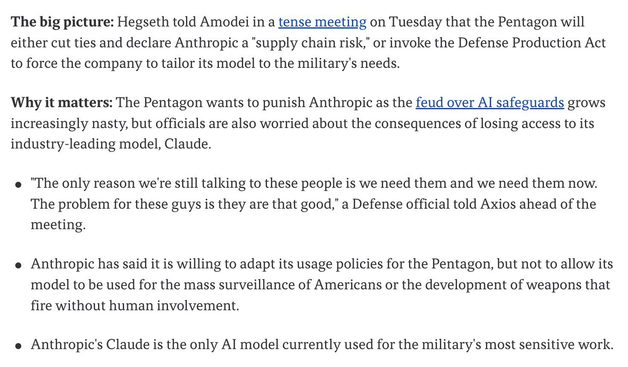

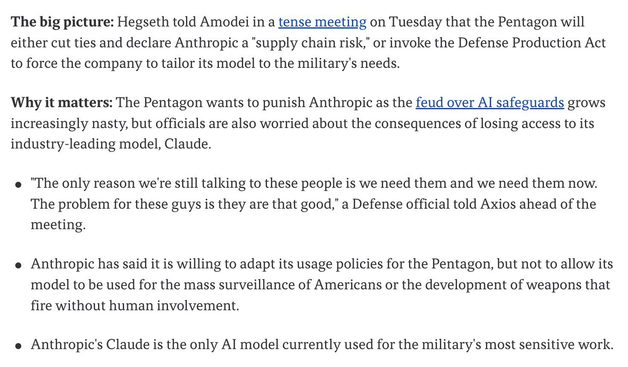

RED ALERT! You remember a couple of days ago when I said #Anthropic was refusing to give the Secretary of Scotch the ability to 1) engage in mass surveillance of Americans and 2) shoot without human intervention?

Pete Hegseth has now said the government will seize the means of #AI production, if Anthropic doesn’t let him kill Americans using #Claude.

Axios link: https://www.axios.com/2026/02/24/anthropic-pentagon-claude-hegseth-dario

Archive link: https://archive.ph/aqUU9

h/t Marcy Wheeler

@emma@orbital.horse

@zenn_trend_bot@silicon.moe · Reply to Zenn Trends's post

📰 I made client-proposal-quality slides "with Markdown alone" [Slidev x Claude Opus 4.6] (👍 66)

🇬🇧 Creating professional presentation slides using only Markdown with Slidev and Claude Opus

@zenn_trend_bot@silicon.moe

🕐 2026-02-13 15:57 UTC

📰 I tried taking a night walk with Claude-chan (👍 89)

🇬🇧 Embodied AI experiment: Claude connected to camera and sensors for real-world interaction and night walks

🔗 https://zenn.dev/nextbeat/articles/2026-02-embodied-claude-walk

@ojrask@piipitin.fi

"AI"-users are spamming so many shitty PRs that GitHub is considering allowing repository owners to outright disable the pull request system on their repos to prevent low-quality contributions from reaching them.

https://github.com/orgs/community/discussions/185387

#AI #LLM #Copilot #Claude #ChatGPT #GitHub #Microsoft #Microslop

@wwhitlow@indieweb.social

Who are these eminent philosophers?

Anthropic describes this constitution as being written for Claude, and as being "optimized for precision over accessibility." However, on a major philosophical claim there is clearly a great deal of ambiguity about how to even evaluate it. "Eminent philosophers" is an appeal to authority. If they were named, it would be possible to evaluate their claims in context. As written, this is neither precise nor accessible.

@jzb@hachyderm.io

A thought that popped into my head when I woke up at 4 am and couldn’t get back to sleep…

Imagine that AI/LLM tools were being marketed to workers as a way to do the same work more quickly and work fewer hours without telling their employers.

“Use ChatGPT to write your TPS reports, go home at lunchtime. Spend more time with your kids!” “Use Claude to write your code, turn 60-hour weeks into four-day weekends!” “Collect two paychecks by using AI! You can hold two jobs without the boss knowing the difference!”

Imagine if AI/LLM tools were not shareholder catnip, but a grassroots movement of tooling that workers were sharing with each other to work less. Same quality of output, but instead of being pushed top-down, being adopted to empower people to work less and “cheat” employers.

Imagine if unions were arguing for the right of workers to use LLMs as labor saving devices, instead of trying to protect members from their damage.

CEOs would be screaming bloody murder. There’d be an overnight industry in AI-detection tools and immediate bans on AI in the workplace. Instead of Microsoft 365 Copilot, Satya would be out promoting Microsoft SlopGuard - add-ons that detect LLM tools running on Windows and prevent AI scrapers from harvesting your company’s valuable content for training.

The media would be running horror stories about the terrible trend of workers getting the same pay for working less, and the awful quality of LLM output. Maybe they’d still call them “hallucinations,” but it’d be in the terrified tone of 80s anti-drug PSAs.

What I’m trying to say in my sleep-deprived state is that you shouldn’t ignore the intent and ill effects of these tools. If they were good for you, shareholders would hate them.

You should understand that they’re anti-worker and anti-human. TPTB would be fighting them tooth and nail if their benefits were reversed. It doesn’t matter how good they get, or how interesting they are: the ultimate purpose of the industry behind them is to create less demand for labor and aggregate more wealth in fewer hands.

Unless you happen to be in a very very small club of ultra-wealthy tech bros, they’re not for you, they’re against you. #AI #LLMs #claude #chatgpt

@ojrask@piipitin.fi

@metin@graphics.social

So, now they know how real creators feel after having been ripped off by "AI"…

https://futurism.com/artificial-intelligence/ai-prompt-plagiarism-art

#tech #technology #BigTech #AI #ArtificialIntelligence #LLM #LLMs #MachineLearning #GenAI #generativeAI #AISlop #Meta #Google #NVIDIA #gemini #OpenAI #ChatGPT #anthropic #claude

@yvg@indieweb.social

Been experimenting with #zed + #claude a bit, a few things are very odd:

1. The quality of interactions I'm having with Claude inside Zed is of lower quality than in VSCode + Claude or in Cursor. It seems "dumber", assumes more, doesn't verify claims, just generally more nonsensical answers and needs more hand-holding.

2. It seems to consume a lot more tokens, I'm not sure why, I suspect they don't properly cache, don't compact, don't optimise input/outputs, etc. This is horrific.

@kerrick@ruby.social

I really need some better workflows and tools for AI-Assisted Development. I'm learning a lot of things I think might be good patterns, but it is still chaos.

Got any tips for me?

#LLM #VibeCoding #AI #SoftwareEngineering #ComputerProgramming #Ruby #Gemini #Claude #Antigravity #Amp #Ratatui #RatatuiRuby

@jasonkim@hackers.pub

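Continuing from the previous post, this post looks at the concrete way Claude uses tools. The list of tools Claude can use is included in the Tools section. For details on the Tools section, see the previous post.

Tool Use is the mechanism that lets Claude call external tools (functions) to carry out real work. Tasks that text generation alone cannot perform, such as reading files, running commands, and searching the web, are done through tools.

When Claude is given the schemas of the available tools, it analyzes the user's request, selects the appropriate tool, and requests tool use with the necessary parameters. The agent (client) receives this request, actually runs the tool, and passes the result back to Claude.

To use a tool, Claude first has to know which tools exist. The agent defines the available tools in the tools array of the API request. Each tool includes a name, a description, and an input schema.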

```json
{
  "name": "Bash",
  "description": "Executes a given bash command in a persistent shell session with optional timeout, ensuring proper handling and security measures.\n\nIMPORTANT: This tool is for terminal operations like git, npm, docker, etc...",
  "input_schema": {
    "type": "object",
    "properties": {
      "command": {
        "type": "string",
        "description": "The command to execute"
      },
      "timeout": {
        "type": "number",
        "description": "Optional timeout in milliseconds (max 600000)"
      },
      "description": {
        "type": "string",
        "description": "Clear, concise description of what this command does in 5-10 words, in active voice."
      }
    },
    "required": ["command"],
    "additionalProperties": false,
    "$schema": "http://json-schema.org/draft-07/schema#"
  }
}
```

```json
{
  "name": "Glob",
  "description": "- Fast file pattern matching tool that works with any codebase size\n- Supports glob patterns like \"**/*.js\" or \"src/**/*.ts\"\n- Returns matching file paths sorted by modification time\n- Use this tool when you need to find files by name patterns",
  "input_schema": {
    "type": "object",
    "properties": {
      "pattern": {
        "type": "string",
        "description": "The glob pattern to match files against"
      },
      "path": {
        "type": "string",
        "description": "The directory to search in. If not specified, the current working directory will be used."
      }
    },
    "required": ["pattern"],
    "additionalProperties": false,
    "$schema": "http://json-schema.org/draft-07/schema#"
  }
}
```

In a tool definition, the description is important. Claude reads it to judge in which situations the tool should be used. The input_schema, written in JSON Schema format, defines which parameters Claude must pass, and in what form, when it calls the tool.
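As a rough illustration of what the input_schema constrains, the sketch below checks a tool input against only two of the schema keywords shown above, `required` and `additionalProperties`. The helper name `check_input` and the trimmed-down `glob_tool` dictionary are assumptions made for this example; a real agent would more likely use a full JSON Schema validator.

```python
# Hypothetical helper: covers only `required` and `additionalProperties: false`,
# not the rest of JSON Schema (types, formats, nesting, ...).
def check_input(tool_def: dict, tool_input: dict) -> list[str]:
    schema = tool_def["input_schema"]
    allowed = schema.get("properties", {})
    problems = []
    for key in schema.get("required", []):
        if key not in tool_input:
            problems.append(f"missing required parameter: {key}")
    if schema.get("additionalProperties") is False:
        for key in tool_input:
            if key not in allowed:
                problems.append(f"unexpected parameter: {key}")
    return problems


# Trimmed copy of the Glob definition (descriptions omitted for brevity).
glob_tool = {
    "name": "Glob",
    "input_schema": {
        "type": "object",
        "properties": {"pattern": {"type": "string"}, "path": {"type": "string"}},
        "required": ["pattern"],
        "additionalProperties": False,
    },
}

print(check_input(glob_tool, {"pattern": "**/*.entity.ts"}))
print(check_input(glob_tool, {"path": "src"}))
```

An empty list means the input passed these two checks; anything else is a human-readable list of problems the agent could send back as an error result.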

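Claude selects tools within the conversation flow of the Messages API. Let's walk through a real example.

When the user asks "Explore the entity structure in this NestJS project", the agent sends a message like the following to the API:

```json
{
  "role": "user",
  "content": [
    {
      "type": "text",
      "text": "Explore the entity structure in this NestJS TypeScript project..."
    }
  ]
}
```

Claude analyzes the user's request, selects the tools needed for the task, and responds with tool_use blocks: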

```json
{
  "role": "assistant",
  "content": [
    {
      "type": "text",
      "text": "I'll thoroughly explore the entity structure of this NestJS project."
    },
    {
      "type": "tool_use",
      "id": "toolu_01ABC123XYZ",
      "name": "Glob",
      "input": {
        "pattern": "**/*.entity.ts"
      }
    },
    {
      "type": "tool_use",
      "id": "toolu_01DEF456UVW",
      "name": "Bash",
      "input": {
        "command": "find /workspace/my-nestjs-project/src -type f -name \"*.ts\" | grep -E \"(entity|entities)\" | head -20",
        "description": "Find entity files in src directory"
      }
    }
  ]
}
```

One point is worth noting here. Claude can request several tools at once in a single response. In the example above it requested two tools, Glob and Bash, in parallel. Each tool request is given a unique id, which is used later to map results back to requests.
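On the agent side, picking those requests out of the response could look roughly like this. It is a minimal sketch over plain dictionaries: the function name `extract_tool_requests` is an assumption, and the message literal is an abbreviated stand-in for the response above (the Bash command is shortened to `echo hi`).

```python
def extract_tool_requests(message: dict) -> dict[str, dict]:
    """Return {tool_use id: block} for every tool_use block in an assistant message."""
    return {
        block["id"]: block
        for block in message.get("content", [])
        if block.get("type") == "tool_use"
    }


# Abbreviated stand-in for the assistant message shown above.
assistant_message = {
    "role": "assistant",
    "content": [
        {"type": "text", "text": "Exploring the entity structure."},
        {"type": "tool_use", "id": "toolu_01ABC123XYZ", "name": "Glob",
         "input": {"pattern": "**/*.entity.ts"}},
        {"type": "tool_use", "id": "toolu_01DEF456UVW", "name": "Bash",
         "input": {"command": "echo hi"}},
    ],
}

requests = extract_tool_requests(assistant_message)
for tool_use_id, block in requests.items():
    print(tool_use_id, block["name"])
```

Keying the requests by id keeps the later mapping from result to request a simple dictionary lookup.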

When Claude requests tool use, the stop_reason of the API response is set to "tool_use":

```json
{
  "id": "msg_01XYZ789ABC",
  "type": "message",
  "role": "assistant",
  "model": "claude-haiku-4-5-20251001",
  "content": [...],
  "stop_reason": "tool_use",
  "usage": {
    "input_tokens": 714,
    "output_tokens": 314
  }
}
```

This stop_reason signals to the agent that the response is not finished and that tools need to be run.

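When the agent (client) receives a response with stop_reason: "tool_use", it must perform the following steps:

1. Parse the tool requests: extract the type: "tool_use" blocks from the response's content array.
2. Run the tools: run the actual tool for each tool request. For example:
   - Bash tool → run the actual bash command on the system
   - Glob tool → perform pattern matching on the file system
   - Read tool → read the file contents
3. Collect the results: gather the output of each tool and pair it with its tool_use_id.
4. Return the results to the model: send the collected results back to the model in tool_result format.

Throughout this process the agent is responsible for tracking whether each tool run succeeded or failed, handling timeouts, performing security checks, and so on. Claude knows only the schema and purpose of each tool; actually running it is the agent's job.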
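The four steps can be sketched with a small dispatch table. Everything here is an illustrative assumption rather than Claude Code's actual implementation: the handler names, the `HANDLERS` table, and the choice to report any exception as an error result. The Glob handler also sorts alphabetically instead of by modification time, and timeouts and security checks are left out.

```python
import glob
import os


def run_glob(args: dict) -> str:
    # Simplified: alphabetical order, not modification time.
    base = args.get("path", ".")
    matches = glob.glob(os.path.join(base, args["pattern"]), recursive=True)
    return "\n".join(sorted(matches))


def run_read(args: dict) -> str:
    with open(args["file_path"], encoding="utf-8") as handle:
        return handle.read()


# Hypothetical mapping from tool name to local handler.
HANDLERS = {"Glob": run_glob, "Read": run_read}


def execute_tool_requests(content: list[dict]) -> list[dict]:
    results = []
    for block in content:
        if block.get("type") != "tool_use":  # step 1: keep only tool requests
            continue
        handler = HANDLERS.get(block["name"])
        try:  # step 2: run the tool
            if handler is None:
                raise ValueError(f"unknown tool: {block['name']}")
            output, failed = handler(block["input"]), False
        except Exception as exc:
            output, failed = f"{type(exc).__name__}: {exc}", True
        results.append({  # step 3: pair the output with its tool_use_id
            "type": "tool_result",
            "tool_use_id": block["id"],
            "content": output,
            "is_error": failed,
        })
    return results  # step 4: the caller sends these back in a user message
```

Reporting failures as results rather than raising lets the model see what went wrong and decide how to proceed.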

After running the tools, the agent passes the results to the model in tool_result format. These results are sent as a message with the user role.

```json
{
  "role": "user",
  "content": [
    {
      "tool_use_id": "toolu_01DEF456UVW",
      "type": "tool_result",
      "content": "/workspace/my-nestjs-project/src/modules/chat/entities/dm-unlock.entity.ts\n/workspace/my-nestjs-project/src/modules/agora/entities/call-session.entity.ts\n/workspace/my-nestjs-project/src/modules/user/entities/user.entity.ts\n/workspace/my-nestjs-project/src/modules/user/entities/user-profile.entity.ts\n/workspace/my-nestjs-project/src/modules/item/entities/item.entity.ts\n...",
      "is_error": false
    },
    {
      "tool_use_id": "toolu_01ABC123XYZ",
      "type": "tool_result",
      "content": "/workspace/my-nestjs-project/src/modules/agora/entities/agora-event-log.entity.ts\n/workspace/my-nestjs-project/src/modules/agora/entities/call-participant.entity.ts\n/workspace/my-nestjs-project/src/modules/item/entities/item.entity.ts\n...",
      "cache_control": {
        "type": "ephemeral"
      }
    }
  ]
}
```

The key fields of each tool_result are as follows:

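| Field | Description |
|---|---|
| tool_use_id | Unique ID of the tool request Claude made. Maps the result to the request it answers |
| type | Always "tool_result" |
| content | The actual result of the tool run (string) |
| is_error | true when the tool run failed |
| cache_control | (Optional) control option for prompt caching |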
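Because tool_use_id is what ties a result to its request, an agent may want to confirm that every requested id has a result before sending the message. The sketch below assumes the agent prefers to fail fast in that case; the function name `build_tool_result_message` is hypothetical.

```python
def build_tool_result_message(requested_ids: list[str], results: list[dict]) -> dict:
    """Wrap tool_result blocks in a user-role message, checking ids first."""
    answered = {result["tool_use_id"] for result in results}
    missing = [rid for rid in requested_ids if rid not in answered]
    if missing:
        raise ValueError(f"no tool_result for: {missing}")
    return {"role": "user", "content": results}


message = build_tool_result_message(
    ["toolu_01DEF456UVW", "toolu_01ABC123XYZ"],
    [
        {"type": "tool_result", "tool_use_id": "toolu_01DEF456UVW",
         "content": "file list", "is_error": False},
        {"type": "tool_result", "tool_use_id": "toolu_01ABC123XYZ",
         "content": "file list"},
    ],
)
print(message["role"], len(message["content"]))
```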

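After receiving the tool_result, Claude analyzes it and requests tool_use again if more tools are needed. Once enough information has been gathered, it generates the final response. By repeating this cycle, even complex tasks can be carried out step by step:

```text
User → Claude: "Explore the entity structure"
Claude → Agent: tool_use (Glob, Bash)
Agent → Claude: tool_result (file list)
Claude → Agent: tool_use (Read - multiple files)
Agent → Claude: tool_result (file contents)
Claude → User: final analysis
```

In the real example, Claude first found the list of entity files with Glob and Bash, and after receiving those results it used the Read tool to read and analyze the individual files: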

```json
{
  "type": "text",
  "text": "Good. Now I'll read the main entity files."
},
{
  "type": "tool_use",
  "id": "toolu_01GHI789RST",
  "name": "Read",
  "input": {
    "file_path": "/workspace/my-nestjs-project/src/modules/user/entities/user.entity.ts"
  }
},
{
  "type": "tool_use",
  "id": "toolu_01JKL012MNO",
  "name": "Read",
  "input": {
    "file_path": "/workspace/my-nestjs-project/src/modules/user/entities/user-profile.entity.ts"
  }
}
```

Agents like Claude Code tell the model which tools are available so that it uses them proactively, and in doing so they run tools in cooperation with the user's execution environment. AskUserQuestion, which asks the user a question, is a tool, and even ExitPlanMode, which leaves plan mode, is a tool.

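The capabilities provided by MCP (Model Context Protocol) servers are ultimately exposed as tools too, and Subagent calls are made through tools as well. The same goes for Skills. In the end, almost every extension feature of Claude Code runs on top of a single mechanism: Tool Use.

Understanding this structure makes it clear how Claude Code reads files, runs code, and searches the web. It also shows that the same pattern applies when adding new tools or integrating an MCP server.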
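The whole request, execute, return cycle described in this post can be condensed into one loop. This is only a sketch under stated assumptions: `call_model` and `run_tool` are hypothetical callables supplied by the caller (no real API client is used), the model is replaced by a scripted stand-in, and "end_turn" is assumed as the stop_reason of a finished response.

```python
from typing import Callable


def run_agent_loop(
    call_model: Callable[[list[dict]], dict],
    run_tool: Callable[[str, dict], str],
    user_text: str,
    max_turns: int = 10,
) -> dict:
    messages = [{"role": "user", "content": [{"type": "text", "text": user_text}]}]
    for _ in range(max_turns):
        response = call_model(messages)
        messages.append({"role": "assistant", "content": response["content"]})
        if response["stop_reason"] != "tool_use":
            return response  # final answer, nothing left to run
        results = [
            {
                "type": "tool_result",
                "tool_use_id": block["id"],
                "content": run_tool(block["name"], block["input"]),
            }
            for block in response["content"]
            if block["type"] == "tool_use"
        ]
        messages.append({"role": "user", "content": results})
    raise RuntimeError("model kept requesting tools past max_turns")


# Scripted stand-in for the model: one tool request, then a final answer.
scripted = iter([
    {"stop_reason": "tool_use", "content": [
        {"type": "tool_use", "id": "toolu_1", "name": "Glob",
         "input": {"pattern": "**/*.entity.ts"}},
    ]},
    {"stop_reason": "end_turn", "content": [
        {"type": "text", "text": "Found 1 entity file."},
    ]},
])

final = run_agent_loop(
    lambda messages: next(scripted),
    lambda name, args: "src/user.entity.ts",
    "Explore the entity structure",
)
print(final["content"][0]["text"])
```

The `max_turns` cap is a design choice for the sketch, so that a model which never stops requesting tools cannot loop forever.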

@oageo@c.osumiakari.jp

Spend the year-end under the kotatsu using Claude to the fullest!!!

A campaign that doubles usage limits at the end of 2025 for users on Claude's paid plans - osumiakari.jp

www.osumiakari.jp/articles/20251225-claudelimit2x-personalplan/ #news #ニュース #Claude #Anthropic

@manlycoffee@techhub.social

So, I'm trying to figure out what behaviour people prefer for editing a prompt in a chat view:

- Locally fork the thread (each fork can be browsed with arrow buttons at the bottom of the prompt message)

- Just edit the prompt and generate the immediate response, keeping rest of the thread the same

#AI #ChatGPT #Claude #ChatBot #ArtificialIntelligence #LLM #LargeLanguageModels

| Option | Voters |

|---|---|

| Fork thread locally (ChatGPT's default behaviour) | 2 (67%) |

| Only edit next response (remainder stays intact) | 1 (33%) |

@jasonkim@hackers.pub

이번 글에서는 지난글에 이어서 Claude가 도구를 사용하는 구체적인 방법을 알아본다. Claude가 사용할 수 있는 도구들의 목록은 Tools 섹션에 포함되어 있다. Tools 섹션에 대해서는 이전 글을 참고한다.

Tool Use는 Claude가 외부 도구(함수)를 호출하여 실제 작업을 수행할 수 있게 하는 메커니즘이다. Claude는 텍스트 생성만으로는 수행할 수 없는 작업들, 예를 들어 파일 읽기, 명령어 실행, 웹 검색 등을 도구를 통해 수행한다.

Claude에게 사용 가능한 도구들의 스키마를 알려주면 Claude는 사용자의 요청을 분석하여 적절한 도구를 선택하고 필요한 파라미터와 함께 도구 사용을 요청한다. 에이전트(클라이언트)는 이 요청을 받아 실제로 도구를 실행하고 그 결과를 다시 Claude에게 전달한다.

Claude가 도구를 사용하려면 먼저 어떤 도구가 있는지 알아야 한다. 에이전트는 API 요청의 tools 배열에 사용 가능한 도구들을 정의한다. 각 도구는 이름, 설명, 그리고 입력 스키마를 포함한다.

{

"name": "Bash",

"description": "Executes a given bash command in a persistent shell session with optional timeout, ensuring proper handling and security measures.\n\nIMPORTANT: This tool is for terminal operations like git, npm, docker, etc...",

"input_schema": {

"type": "object",

"properties": {

"command": {

"type": "string",

"description": "The command to execute"

},

"timeout": {

"type": "number",

"description": "Optional timeout in milliseconds (max 600000)"

},

"description": {

"type": "string",

"description": "Clear, concise description of what this command does in 5-10 words, in active voice."

}

},

"required": ["command"],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}

}{

"name": "Glob",

"description": "- Fast file pattern matching tool that works with any codebase size\n- Supports glob patterns like \"**/*.js\" or \"src/**/*.ts\"\n- Returns matching file paths sorted by modification time\n- Use this tool when you need to find files by name patterns",

"input_schema": {

"type": "object",

"properties": {

"pattern": {

"type": "string",

"description": "The glob pattern to match files against"

},

"path": {

"type": "string",

"description": "The directory to search in. If not specified, the current working directory will be used."

}

},

"required": ["pattern"],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}

}도구 정의에서 description이 중요하다. Claude는 이 설명을 읽고 어떤 상황에서 해당 도구를 사용해야 하는지 판단한다. input_schema는 JSON Schema 형식으로 Claude가 도구를 호출할 때 어떤 파라미터를 어떤 형식으로 전달해야 하는지 정의한다.

Claude가 도구를 선택하는 과정은 Messages API의 대화 흐름 속에서 이루어진다. 실제 예시를 통해 살펴보자.

사용자가 "이 NestJS 프로젝트에서 entity 구조를 탐색해주세요"라고 요청하면 에이전트는 다음과 같은 메시지를 API에 전송한다:

{

"role": "user",

"content": [

{

"type": "text",

"text": "이 NestJS TypeScript 프로젝트에서 entity 구조를 탐색해주세요..."

}

]

}Claude는 사용자의 요청을 분석하고 작업 수행에 필요한 도구들을 선택하여 tool_use 블록으로 응답한다:

{

"role": "assistant",

"content": [

{

"type": "text",

"text": "이 NestJS 프로젝트의 entity 구조를 철저하게 탐색하겠습니다."

},

{

"type": "tool_use",

"id": "toolu_01ABC123XYZ",

"name": "Glob",

"input": {

"pattern": "**/*.entity.ts"

}

},

{

"type": "tool_use",

"id": "toolu_01DEF456UVW",

"name": "Bash",

"input": {

"command": "find /workspace/my-nestjs-project/src -type f -name \"*.ts\" | grep -E \"(entity|entities)\" | head -20",

"description": "Find entity files in src directory"

}

}

]

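}

One point deserves attention here: Claude can request several tools in a single response. In the example above it requested two tools, Glob and Bash, in parallel. Each tool request carries a unique id, which is used later to map results back to requests.

When Claude requests tool use, the stop_reason of the API response is set to "tool_use":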

{

"id": "msg_01XYZ789ABC",

"type": "message",

"role": "assistant",

"model": "claude-haiku-4-5-20251001",

"content": [...],

"stop_reason": "tool_use",

"usage": {

"input_tokens": 714,

"output_tokens": 314

}

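}

This stop_reason signals to the agent that the response is not finished and that tools need to be run.

When the agent (client) receives a response with stop_reason: "tool_use", it must perform the following steps:

1. Parse the tool requests: extract the type: "tool_use" blocks from the response's content array.
2. Run the tools: execute the actual tool for each request. For example:
   - Bash tool → run the actual bash command on the system
   - Glob tool → perform pattern matching on the file system
   - Read tool → read the file contents
3. Collect the results: gather each tool's output and pair it with its tool_use_id.
4. Return the results to the model: send the collected results back to the model in tool_result format.

During this process the agent is responsible for tracking whether each tool run succeeded or failed, handling timeouts, performing security checks, and so on. Claude knows only each tool's schema and purpose; actual execution is the agent's job.

After running the tools, the agent passes the results to the model in tool_result format. The results are sent as a message with the user role.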

{

"role": "user",

"content": [

{

"tool_use_id": "toolu_01DEF456UVW",

"type": "tool_result",

"content": "/workspace/my-nestjs-project/src/modules/chat/entities/dm-unlock.entity.ts\n/workspace/my-nestjs-project/src/modules/agora/entities/call-session.entity.ts\n/workspace/my-nestjs-project/src/modules/user/entities/user.entity.ts\n/workspace/my-nestjs-project/src/modules/user/entities/user-profile.entity.ts\n/workspace/my-nestjs-project/src/modules/item/entities/item.entity.ts\n...",

"is_error": false

},

{

"tool_use_id": "toolu_01ABC123XYZ",

"type": "tool_result",

"content": "/workspace/my-nestjs-project/src/modules/agora/entities/agora-event-log.entity.ts\n/workspace/my-nestjs-project/src/modules/agora/entities/call-participant.entity.ts\n/workspace/my-nestjs-project/src/modules/item/entities/item.entity.ts\n...",

"cache_control": {

"type": "ephemeral"

}

}

]

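}

The key fields of each tool_result are:

| Field | Description |
|---|---|
| tool_use_id | Unique ID of the tool request Claude made; maps the result to its request |
| type | Always "tool_result" |
| content | The actual output of the tool run (a string in this example; an array of content blocks is also accepted) |
| is_error | true when the tool run failed |
| cache_control | (Optional) control option for prompt caching |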
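The parse, run, collect, and return steps can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not Claude Code's implementation: `run_tool_calls`, `fake_glob`, `EXECUTORS`, and `FAKE_FILES` are hypothetical names, and the Glob executor matches against an in-memory list instead of a real file system so the example runs anywhere.

```python
import fnmatch

# Hypothetical in-memory "file system" so the sketch needs no real project.
FAKE_FILES = ["src/main.ts", "src/modules/user/entities/user.entity.ts"]


def fake_glob(tool_input: dict) -> str:
    """Stand-in for a Glob executor: match FAKE_FILES against the pattern."""
    return "\n".join(f for f in FAKE_FILES if fnmatch.fnmatch(f, tool_input["pattern"]))


# Maps a tool name to the function that actually runs it (assumed layout).
EXECUTORS = {"Glob": fake_glob}


def run_tool_calls(assistant_content: list, executors: dict) -> dict:
    """Run every tool_use block and return one user message of tool_result blocks."""
    results = []
    for block in assistant_content:
        if block.get("type") != "tool_use":
            continue  # text blocks need no execution
        result = {"type": "tool_result", "tool_use_id": block["id"]}
        try:
            result["content"] = executors[block["name"]](block["input"])
        except Exception as exc:  # unknown tool or failed run
            result["content"] = f"{type(exc).__name__}: {exc}"
            result["is_error"] = True
        results.append(result)
    return {"role": "user", "content": results}


assistant_content = [
    {"type": "text", "text": "Exploring the entity structure."},
    {"type": "tool_use", "id": "toolu_demo_1", "name": "Glob",
     "input": {"pattern": "**/*.entity.ts"}},
]
print(run_tool_calls(assistant_content, EXECUTORS))
```

Reporting failures through is_error rather than raising keeps the conversation going, so the model can see the error text and decide what to try next.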

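After receiving tool_result, Claude analyzes the results and requests tool_use again if more tools are needed. Once enough information has been gathered, it produces the final response. Repeating this cycle lets it carry out complex tasks step by step:

User → Claude: "Explore the entity structure"

Claude → Agent: tool_use (Glob, Bash)

Agent → Claude: tool_result (file list)

Claude → Agent: tool_use (Read - multiple files)

Agent → Claude: tool_result (file contents)

Claude → User: final analysis

In the actual example, Claude first located the entity files with Glob and Bash and, after receiving those results, read and analyzed the individual files with the Read tool:

{

"type": "text",

"text": "Good. Now I will read the main entity files."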

},

{

"type": "tool_use",

"id": "toolu_01GHI789RST",

"name": "Read",

"input": {

"file_path": "/workspace/my-nestjs-project/src/modules/user/entities/user.entity.ts"

}

},

{

"type": "tool_use",

"id": "toolu_01JKL012MNO",

"name": "Read",

"input": {

"file_path": "/workspace/my-nestjs-project/src/modules/user/entities/user-profile.entity.ts"

}

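}

An agent such as Claude Code tells the model which tools are available so that the model uses them proactively, and then runs those tools in cooperation with the user's execution environment. AskUserQuestion, which asks the user a question, is a tool, and even ExitPlanMode, which leaves plan mode, is a tool.

Capabilities provided by MCP (Model Context Protocol) servers are also ultimately exposed as tools, and Subagent calls go through a tool as well. The same goes for Skills. In short, nearly every extension feature of Claude Code runs on top of a single mechanism: Tool Use.

Understanding this structure makes it clear how Claude Code reads files, runs code, and searches the web. It also shows that the same pattern applies when adding a new tool or integrating an MCP server.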
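The request and result cycle described in this post amounts to a loop that ends when stop_reason is no longer "tool_use". The sketch below illustrates that loop under clear assumptions: `agent_loop` and `scripted_model` are hypothetical, and `scripted_model` is a stand-in that returns canned responses instead of calling the real API, so the control flow can be run offline.

```python
def scripted_model(messages: list) -> dict:
    """Stand-in for an API call: request one Read, then finish (assumed script)."""
    turns = sum(1 for m in messages if m["role"] == "assistant")
    if turns == 0:
        return {"stop_reason": "tool_use", "content": [
            {"type": "tool_use", "id": "toolu_demo_1", "name": "Read",
             "input": {"file_path": "user.entity.ts"}}]}
    return {"stop_reason": "end_turn",
            "content": [{"type": "text", "text": "Analysis complete."}]}


def agent_loop(call_model, executors: dict, user_text: str, max_turns: int = 10) -> list:
    """Alternate model calls and tool runs until the model stops asking for tools."""
    messages = [{"role": "user", "content": [{"type": "text", "text": user_text}]}]
    for _ in range(max_turns):
        response = call_model(messages)
        messages.append({"role": "assistant", "content": response["content"]})
        if response["stop_reason"] != "tool_use":
            return messages  # final answer reached
        results = []
        for block in response["content"]:
            if block["type"] == "tool_use":
                output = executors[block["name"]](block["input"])
                results.append({"type": "tool_result",
                                "tool_use_id": block["id"], "content": output})
        # Tool results go back to the model as a user-role message.
        messages.append({"role": "user", "content": results})
    raise RuntimeError("max_turns exceeded without a final response")


# Hypothetical executor that pretends to read a file.
executors = {"Read": lambda tool_input: f"contents of {tool_input['file_path']}"}
history = agent_loop(scripted_model, executors, "Explore the entity structure")
print([m["role"] for m in history])  # prints ['user', 'assistant', 'user', 'assistant']
```

The max_turns guard is a design choice of this sketch: without some bound, a model that keeps requesting tools would keep the loop running indefinitely.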



@jasonkim@hackers.pub

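A Claude API request consists of four broad categories: System Messages, Messages, Tools, and model/configuration options.

Each plays the following role.

System Messages are the top-level settings that give Claude its role, personality, constraints, and so on. Multiple system messages can be passed as an array.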

"system": [

{

"type": "text",

"text": "You are Claude Code, Anthropic's official CLI for Claude.",

"cache_control": {

"type": "ephemeral"

}

},

{

"type": "text",

"text": "You are an interactive CLI tool that helps users with software engineering tasks...",

"cache_control": {

"type": "ephemeral"

}

}

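]

System Messages include the following:

- Caching settings via cache_control

Messages is an array that accumulates the conversation exchanged in alternating user and assistant turns. An assistant message does not have to be an actual model response. Taking advantage of this, if you pre-write an assistant message and send it in the API call, Claude continues its response from the end of that content. This is called the Prefill technique.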
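As a rough illustration of Prefill, the sketch below appends a pre-written assistant turn to a message list and shows how the final text would be reassembled. `with_prefill` and `join_prefill` are hypothetical helpers, and the continuation string in the usage line is made up for illustration; it is not real model output.

```python
import copy


def with_prefill(messages: list, prefill: str) -> list:
    """Return a copy of messages with a pre-written assistant turn appended."""
    if not messages or messages[-1]["role"] != "user":
        raise ValueError("a prefill should follow a user message")
    extended = copy.deepcopy(messages)  # leave the caller's history untouched
    extended.append({"role": "assistant",
                     "content": [{"type": "text", "text": prefill}]})
    return extended


def join_prefill(prefill: str, completion: str) -> str:
    """The reply continues after the prefill, so the full text is the two joined."""
    return prefill + completion


history = [{"role": "user", "content": [
    {"type": "text", "text": "List two entity names as a JSON array."}]}]
request_messages = with_prefill(history, "[")

# Hypothetical continuation, shown only to illustrate the joining step.
print(join_prefill("[", '"User", "Item"]'))  # prints ["User", "Item"]
```

Prefilling an opening bracket like this is a common way to nudge the response toward a specific output format.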

This conversation history is what lets Claude keep context as it responds.

"messages": [

{

"role": "user",

"content": [...]

},

{

"role": "assistant",

"content": [...]

},

{

"role": "user",

"content": [...]

}

]

A user message's content mainly consists of two types:

1. text - the user's ordinary message or a system reminder

{

"role": "user",

"content": [

{

"type": "text",

"text": "Design entities for a gift-exchange feature."

}

]

}

2. tool_result - returns the result of a tool run

{

"role": "user",

"content": [

{

"tool_use_id": "toolu_01Qj7gnFLKWBNjg",

"type": "tool_result",

"content": [

{

"type": "text",

"text": "## Entity Structure Exploration Report\n\nThrough a thorough exploration..."

}

]

}

]

}

An assistant message's content mainly consists of three types:

1. text - Claude's response message

{

"type": "text",

"text": "I will start designing the entities for the gift-exchange feature."

}

2. thinking - the reasoning process when Extended Thinking is enabled (verified by signature)

{

"type": "thinking",

"thinking": "The user has asked for an entity design for a gift-exchange feature...",

"signature": "EqskYIChgCKknyFYp5cu1zhVOp7kFTJb..."

}

3. tool_use - a tool call request

{

"type": "tool_use",

"id": "toolu_01Qj7gn6vLKCNjg",

"name": "Task",

"input": {

"subagent_type": "Explore",

"prompt": "Please explore the entity structure in this NestJS TypeScript project...",

"description": "Explore entity structure"

}

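}

The tool-use flow proceeds as follows:

1. Claude requests a tool call with tool_use
2. The agent returns the execution result with tool_result
3. Claude replies with text or requests further tool_use

Which tools can be used in this process is defined by the tools array.

Tools is an array that defines the tools available to Claude. Each tool consists of three fields: name, description, and input_schema.

"tools": [

{

"name": "ToolName",

"description": "Description of the tool...",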

"input_schema": {

"type": "object",

"properties": {...},

"required": [...],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}

}

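]

| Field | Description |
|---|---|
| name | Unique identifier of the tool; Claude calls the tool by this name in tool_use |
| description | Detailed account of the tool's purpose, usage, and caveats; the basis on which Claude decides which tool to pick |
| input_schema | Input parameter definitions in JSON Schema format |

The input_schema follows the JSON Schema draft-07 specification and defines the parameters needed to call the tool.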

"input_schema": {

"type": "object",

"properties": {

"pattern": {

"type": "string",

"description": "The regular expression pattern to search for"

},

"path": {

"type": "string",

"description": "File or directory to search in. Defaults to current working directory."

},

"output_mode": {

"type": "string",

"enum": ["content", "files_with_matches", "count"],

"description": "Output mode: 'content' shows matching lines, 'files_with_matches' shows file paths..."

},

"-i": {

"type": "boolean",

"description": "Case insensitive search"

},

"head_limit": {

"type": "number",

"description": "Limit output to first N lines/entries"

}

},

"required": ["pattern"],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

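}

Each parameter is defined by the following fields:

| Field | Description |
|---|---|
| type | Data type (string, number, boolean, array, object, etc.) |
| description | Explanation of the parameter's purpose and usage |
| enum | (Optional) list of allowed values; exactly one may be chosen |
| default | (Optional) default value |

The input_schema object itself has these top-level fields:

| Field | Description |
|---|---|
| type | Always "object" |
| properties | Object holding the parameter definitions |
| required | Array of required parameter names; parameters not listed here are optional |
| additionalProperties | When false, undefined parameters cannot be passed |
| $schema | Declares the JSON Schema version |

A complete tool definition looks like this: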

{

"name": "Grep",

"description": "A powerful search tool built on ripgrep\n\n Usage:\n - ALWAYS use Grep for search tasks...",

"input_schema": {

"type": "object",

"properties": {

"pattern": {

"type": "string",

"description": "The regular expression pattern to search for in file contents"

},

"path": {

"type": "string",

"description": "File or directory to search in (rg PATH). Defaults to current working directory."

},

"glob": {

"type": "string",

"description": "Glob pattern to filter files (e.g. \"*.js\", \"*.{ts,tsx}\")"

},

"output_mode": {

"type": "string",

"enum": ["content", "files_with_matches", "count"],

"description": "Output mode. Defaults to 'files_with_matches'."

},

"-A": {

"type": "number",

"description": "Number of lines to show after each match"

},

"-B": {

"type": "number",

"description": "Number of lines to show before each match"

},

"-i": {

"type": "boolean",

"description": "Case insensitive search"

},

"multiline": {

"type": "boolean",

"description": "Enable multiline mode. Default: false."

}

},

"required": ["pattern"],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}

}

The tool_use Claude emits when calling this tool:

{

"type": "tool_use",

"id": "toolu_01ABC123",

"name": "Grep",

"input": {

"pattern": "class.*Entity",

"path": "src/modules",

"glob": "*.ts",

"output_mode": "content",

"-i": true

}

}

Since required contains only pattern, the remaining parameters are optional. Claude consults the descriptions in input_schema to choose appropriate parameters.
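On the agent side, the same schema can be used to sanity-check a tool_use input before running anything. The sketch below is a deliberately small, hand-rolled check covering only required, additionalProperties, type, and enum; `validate_tool_input` and `GREP_SCHEMA` are hypothetical names, and a full JSON Schema validator would cover far more of draft-07 than this does.

```python
# Python types accepted for each JSON Schema type handled by this sketch.
JSON_TYPES = {"string": str, "number": (int, float), "boolean": bool,
              "array": list, "object": dict}

# A trimmed copy of the Grep input_schema, used only as sample data.
GREP_SCHEMA = {
    "type": "object",
    "properties": {
        "pattern": {"type": "string"},
        "path": {"type": "string"},
        "glob": {"type": "string"},
        "output_mode": {"type": "string",
                        "enum": ["content", "files_with_matches", "count"]},
        "-i": {"type": "boolean"},
    },
    "required": ["pattern"],
    "additionalProperties": False,
}


def validate_tool_input(schema: dict, tool_input: dict) -> list[str]:
    """Return a list of violations (empty when the input fits the schema)."""
    errors = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in tool_input:
            errors.append(f"missing required parameter: {name}")
    for name, value in tool_input.items():
        spec = properties.get(name)
        if spec is None:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected parameter: {name}")
            continue
        expected = JSON_TYPES.get(spec.get("type"))
        if expected is not None:
            # bool is a subclass of int in Python, so exclude it for non-boolean types.
            fits = isinstance(value, expected) and (
                spec["type"] == "boolean" or not isinstance(value, bool))
            if not fits:
                errors.append(f"wrong type for {name}: expected {spec['type']}")
        if "enum" in spec and value not in spec["enum"]:
            errors.append(f"value not in enum for {name}: {value!r}")
    return errors


call_input = {"pattern": "class.*Entity", "path": "src/modules", "glob": "*.ts",
              "output_mode": "content", "-i": True}
print(validate_tool_input(GREP_SCHEMA, call_input))  # prints []
```

Returning a list of messages instead of raising on the first problem makes it easy to send every violation back to the model in a single tool_result with is_error set.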

Finally, there are the model selection and the various configuration options:

{

"model": "claude-opus-4-5-20251101",

"max_tokens": 32000,

"thinking": {

"budget_tokens": 31999,

"type": "enabled"

},

"stream": true,

"metadata": {

"user_id": "user_2f2ce5dbb94ac27c8da0d0b28dddf815fc82be54e0..."

}

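}

| Option | Description |
|---|---|
| model | Claude model to use (claude-opus-4-5, claude-sonnet-4-5, etc.) |
| max_tokens | Maximum number of output tokens |
| thinking | Extended Thinking settings (budget_tokens sets the thinking token budget) |
| stream | Whether to stream the response |
| metadata | Metadata such as the user ID |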
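Putting the pieces together, a request body can be assembled from these options plus the system, messages, and tools sections. The sketch below is an assumption-laden illustration: `build_request` is a hypothetical helper, and the rule that the thinking budget must be smaller than max_tokens is an assumption of this sketch that happens to be consistent with the sample values above (31999 against 32000).

```python
import json


def build_request(model: str, max_tokens: int, messages: list, system=None,
                  tools=None, thinking_budget=None, stream: bool = False) -> dict:
    """Assemble a request body from the four sections described in this post."""
    # Assumed constraint: the thinking budget has to fit inside max_tokens.
    if thinking_budget is not None and thinking_budget >= max_tokens:
        raise ValueError("thinking budget must be smaller than max_tokens")
    body = {"model": model, "max_tokens": max_tokens,
            "messages": messages, "stream": stream}
    if system:
        body["system"] = system
    if tools:
        body["tools"] = tools
    if thinking_budget is not None:
        body["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return body


messages = [{"role": "user", "content": [
    {"type": "text", "text": "Design entities for a gift-exchange feature."}]}]
body = build_request("claude-opus-4-5-20251101", 32000, messages,
                     thinking_budget=31999, stream=True)
print(json.dumps(body, indent=2))
```

Optional sections are left out of the body when they are not supplied, which keeps the serialized request close to the minimal shape shown in the samples.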

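So far we have looked at the four core components of the Claude API request body: System Messages, Messages, Tools, and the model/configuration options.

Understanding this structure helps you use the Claude API more effectively.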


@jasonkim@hackers.pub

Claude API의 Request는 크게 4가지 분류를 가지고 있다.

각각은 다음과 같은 역할을 한다.

System Messages는 Claude에게 역할, 성격, 제약사항 등을 지시하는 최상위 설정이다. 배열 형태로 여러 개의 시스템 메시지를 전달할 수 있다.

"system": [

{

"type": "text",

"text": "You are Claude Code, Anthropic's official CLI for Claude.",

"cache_control": {

"type": "ephemeral"

}

},

{

"type": "text",

"text": "You are an interactive CLI tool that helps users with software engineering tasks...",

"cache_control": {

"type": "ephemeral"

}

}

]System Messages에는 다음과 같은 내용이 포함된다:

cache_control을 통한 캐싱 설정Messages는 user와 assistant 역할이 번갈아가며 주고받은 대화를 누적하는 배열이다. assistant 메시지는 반드시 모델의 실제 응답일 필요가 없다. 이를 활요해 API 호출 시 assistant 메시지를 미리 작성해서 전달하면, Claude는 그 내용 이후부터 이어서 응답한다. 이를 Prefill 기법이라 한다.

이 대화 기록을 통해 Claude는 맥락을 유지하며 응답한다.

"messages": [

{

"role": "user",

"content": [...]

},

{

"role": "assistant",

"content": [...]

},

{

"role": "user",

"content": [...]

}

]User의 content는 주로 두 가지 type으로 구성된다:

1. text - 사용자의 일반 메시지나 시스템 리마인더

{

"role": "user",

"content": [

{

"type": "text",

"text": "선물을 주고받는 기능을 위한 entity를 설계하라."

}

]

}2. tool_result - Tool 실행 결과 반환

{

"role": "user",

"content": [

{

"tool_use_id": "toolu_01Qj7gnFLKWBNjg",

"type": "tool_result",

"content": [

{

"type": "text",

"text": "## Entity 구조 탐색 보고서\n\n철저한 탐색을 통해..."

}

]

}

]

}Assistant의 content는 주로 세 가지 type으로 구성된다:

1. text - Claude의 응답 메시지

{

"type": "text",

"text": "선물 주고받기 기능을 위한 entity 설계를 시작하겠습니다."

}2. thinking - Extended Thinking 기능 활성화 시 사고 과정 (signature로 검증)

{

"type": "thinking",

"thinking": "사용자가 선물을 주고받는 기능을 위한 entity 설계를 요청했습니다...",

"signature": "EqskYIChgCKknyFYp5cu1zhVOp7kFTJb..."

}3. tool_use - Tool 호출 요청

{

"type": "tool_use",

"id": "toolu_01Qj7gn6vLKCNjg",

"name": "Task",

"input": {

"subagent_type": "Explore",

"prompt": "이 NestJS TypeScript 프로젝트에서 entity 구조를 탐색해주세요...",

"description": "Entity 구조 탐색"

}

}Tool 사용 흐름은 다음과 같이 진행된다:

tool_use로 Tool 호출 요청tool_result로 실행 결과 반환text 응답 또는 추가 tool_use이 과정에서 어떤 Tool을 사용할 수 있는지는 tools 배열이 정의한다.

Tools는 Claude가 사용할 수 있는 도구들을 정의하는 배열이다. 각 Tool은 name, description, input_schema 세 가지 필드로 구성된다.

"tools": [

{

"name": "ToolName",

"description": "Tool에 대한 설명...",

"input_schema": {

"type": "object",

"properties": {...},

"required": [...],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}

}

]| 필드 | 설명 |

|---|---|

name |

Tool의 고유 식별자. Claude가 tool_use에서 이 이름으로 호출 |

description |

Tool의 용도, 사용법, 주의사항 등을 상세히 기술. Claude가 어떤 Tool을 선택할지 판단하는 근거 |

input_schema |

JSON Schema 형식으로 입력 파라미터 정의 |

input_schema는 JSON Schema draft-07 스펙을 따르며, Tool 호출 시 필요한 파라미터를 정의한다.

"input_schema": {

"type": "object",

"properties": {

"pattern": {

"type": "string",

"description": "The regular expression pattern to search for"

},

"path": {

"type": "string",

"description": "File or directory to search in. Defaults to current working directory."

},

"output_mode": {

"type": "string",

"enum": ["content", "files_with_matches", "count"],

"description": "Output mode: 'content' shows matching lines, 'files_with_matches' shows file paths..."

},

"-i": {

"type": "boolean",

"description": "Case insensitive search"

},

"head_limit": {

"type": "number",

"description": "Limit output to first N lines/entries"

}

},

"required": ["pattern"],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}각 파라미터는 다음 필드들로 정의된다:

| 필드 | 설명 |

|---|---|

type |

데이터 타입 (string, number, boolean, array, object 등) |

description |

파라미터의 용도와 사용법 설명 |

enum |

(선택) 허용되는 값의 목록. 이 중 하나만 선택 가능 |

default |

(선택) 기본값 |

| 필드 | 설명 |

|---|---|

type |

항상 "object" |

properties |

파라미터 정의 객체 |

required |

필수 파라미터 이름 배열. 여기 포함되지 않은 파라미터는 선택적 |

additionalProperties |

false면 정의되지 않은 파라미터 전달 불가 |

$schema |

JSON Schema 버전 명시 |

{

"name": "Grep",

"description": "A powerful search tool built on ripgrep\n\n Usage:\n - ALWAYS use Grep for search tasks...",

"input_schema": {

"type": "object",

"properties": {

"pattern": {

"type": "string",

"description": "The regular expression pattern to search for in file contents"

},

"path": {

"type": "string",

"description": "File or directory to search in (rg PATH). Defaults to current working directory."

},

"glob": {

"type": "string",

"description": "Glob pattern to filter files (e.g. \"*.js\", \"*.{ts,tsx}\")"

},

"output_mode": {

"type": "string",

"enum": ["content", "files_with_matches", "count"],

"description": "Output mode. Defaults to 'files_with_matches'."

},

"-A": {

"type": "number",

"description": "Number of lines to show after each match"

},

"-B": {

"type": "number",

"description": "Number of lines to show before each match"

},

"-i": {

"type": "boolean",

"description": "Case insensitive search"

},

"multiline": {

"type": "boolean",

"description": "Enable multiline mode. Default: false."

}

},

"required": ["pattern"],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}

}

The tool_use block Claude emits when it calls this tool:

{

"type": "tool_use",

"id": "toolu_01ABC123",

"name": "Grep",

"input": {

"pattern": "class.*Entity",

"path": "src/modules",

"glob": "*.ts",

"output_mode": "content",

"-i": true

}

}

Because required lists only pattern, every other parameter is optional. Claude consults the description fields in input_schema to choose suitable parameters.
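The required/optional rule above can be checked mechanically. The following is a minimal sketch, assuming a hand-rolled checker that covers only required, additionalProperties, type, and enum; it is not a full JSON Schema draft-07 validator, and the names validate_tool_input, JSON_TYPES, and GREP_SCHEMA are hypothetical, introduced only for illustration.

```python
# Sketch: check a tool_use "input" object against a tool's input_schema.
# Only required, additionalProperties, type and enum are handled here.

JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def validate_tool_input(schema: dict, tool_input: dict) -> list:
    """Return a list of problems; an empty list means the input passed."""
    problems = []
    properties = schema.get("properties", {})

    # Every name in "required" must be present.
    for name in schema.get("required", []):
        if name not in tool_input:
            problems.append(f"missing required parameter: {name}")

    for name, value in tool_input.items():
        spec = properties.get(name)
        if spec is None:
            # Undefined parameters are rejected only when the schema says so.
            if schema.get("additionalProperties") is False:
                problems.append(f"unknown parameter: {name}")
            continue
        expected = JSON_TYPES.get(spec.get("type"))
        # bool is a subclass of int in Python, so reject it for "number".
        if expected is not None and (
            not isinstance(value, expected)
            or (spec["type"] == "number" and isinstance(value, bool))
        ):
            problems.append(f"{name}: expected {spec['type']}")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"{name}: must be one of {spec['enum']}")
    return problems


# Trimmed copy of the Grep schema shown above (descriptions omitted).
GREP_SCHEMA = {
    "type": "object",
    "properties": {
        "pattern": {"type": "string"},
        "path": {"type": "string"},
        "glob": {"type": "string"},
        "output_mode": {
            "type": "string",
            "enum": ["content", "files_with_matches", "count"],
        },
        "-i": {"type": "boolean"},
    },
    "required": ["pattern"],
    "additionalProperties": False,
}
```

Passing the input object from the tool_use example to validate_tool_input(GREP_SCHEMA, ...) yields an empty list, while an input without pattern is reported as missing a required parameter.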

Finally, the model selection and the assorted configuration options:

{

"model": "claude-opus-4-5-20251101",

"max_tokens": 32000,

"thinking": {

"budget_tokens": 31999,

"type": "enabled"

},

"stream": true,

"metadata": {

"user_id": "user_2f2ce5dbb94ac27c8da0d0b28dddf815fc82be54e0..."

}

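}

| Option | Description |
|---|---|
| model | Claude model to use (claude-opus-4-5, claude-sonnet-4-5, etc.) |
| max_tokens | Maximum number of output tokens |
| thinking | Extended Thinking settings (budget_tokens sets the token budget for thinking) |
| stream | Whether the response is streamed |
| metadata | Metadata such as the user ID |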
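As a rough illustration of how these options sit next to the other parts of the body, here is a small sketch that assembles a request as a plain dict. The function name build_request and its keyword arguments are hypothetical. The check that budget_tokens stays below max_tokens mirrors the example above (31999 under 32000); treating it as a hard rule is an assumption of this sketch.

```python
def build_request(model, messages, *, system=None, tools=None,
                  max_tokens=32000, thinking_budget=None, stream=True):
    """Assemble a request body dict from its parts (illustrative only)."""
    # Assumption: the thinking budget has to fit inside max_tokens.
    if thinking_budget is not None and thinking_budget >= max_tokens:
        raise ValueError("budget_tokens must be smaller than max_tokens")

    body = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": messages,
        "stream": stream,
    }
    # Optional parts are added only when the caller supplies them.
    if system:
        body["system"] = system
    if tools:
        body["tools"] = tools
    if thinking_budget is not None:
        body["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return body
```

Keeping the optional keys out of the dict when they are unused makes the result easy to compare against a captured request.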

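So far we have looked at the four core components of the Claude API request body: system messages, messages, tools, and the model and configuration options.

Understanding this structure makes it possible to use the Claude API more effectively.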

@ojrask@piipitin.fi

@jasonkim@hackers.pub

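A Claude API request is made up of four broad parts: system messages, messages, tools, and the model and configuration options.

Each is described below.

System messages are the top-level settings that instruct Claude on its role, personality, constraints, and so on. Several system messages can be passed as an array.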

"system": [

{

"type": "text",

"text": "You are Claude Code, Anthropic's official CLI for Claude.",

"cache_control": {

"type": "ephemeral"

}

},

{

"type": "text",

"text": "You are an interactive CLI tool that helps users with software engineering tasks...",

"cache_control": {

"type": "ephemeral"

}

}

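]

System messages include content such as caching configuration via cache_control.

Messages is an array that accumulates the conversation exchanged in alternating user and assistant turns. An assistant message does not have to be an actual model response. Taking advantage of this, you can write an assistant message in advance and pass it in the API call, and Claude continues its response from the end of that content. This is called the prefill technique.

Through this conversation history Claude keeps the context while responding.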
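The prefill technique described above can be sketched as a small helper that appends a partial assistant turn to the history. The name with_prefill is hypothetical; stripping trailing whitespace from the prefill is a precaution taken in this sketch, not something stated in the text.

```python
def with_prefill(messages: list, prefill: str) -> list:
    """Return a copy of messages that ends with a partial assistant turn."""
    # A prefill only makes sense as the answer to a user turn.
    if not messages or messages[-1]["role"] != "user":
        raise ValueError("prefill must follow a user message")
    assistant_turn = {
        "role": "assistant",
        # Assumption: trailing whitespace is dropped as a precaution.
        "content": [{"type": "text", "text": prefill.rstrip()}],
    }
    # Build a new list so the caller's history stays untouched.
    return messages + [assistant_turn]
```

For example, prefilling with an opening brace nudges the continuation toward a JSON object, since the model picks up right after that character.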

"messages": [

{

"role": "user",

"content": [...]

},

{

"role": "assistant",

"content": [...]

},

{

"role": "user",

"content": [...]

}

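]

The content of a user message mainly consists of two types:

1. text - the user's ordinary message or a system reminder

{

"role": "user",

"content": [

{

"type": "text",

"text": "Design an entity for a feature that sends and receives gifts."

}

]

}

2. tool_result - returns the result of a tool execution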

{

"role": "user",

"content": [

{

"tool_use_id": "toolu_01Qj7gnFLKWBNjg",

"type": "tool_result",

"content": [

{

"type": "text",

"text": "## Entity Structure Exploration Report\n\nThrough a thorough exploration..."

}

]

}

]

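}

The content of an assistant message mainly consists of three types:

1. text - Claude's response message

{

"type": "text",

"text": "I will start designing the entity for the gift sending and receiving feature."

}

2. thinking - the reasoning process when Extended Thinking is enabled (verified via signature)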

{

"type": "thinking",

"thinking": "The user has asked for an entity design for a feature that sends and receives gifts...",

"signature": "EqskYIChgCKknyFYp5cu1zhVOp7kFTJb..."

}

3. tool_use - a request to call a tool

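{

"type": "tool_use",

"id": "toolu_01Qj7gn6vLKCNjg",

"name": "Task",

"input": {

"subagent_type": "Explore",

"prompt": "Please explore the entity structure in this NestJS TypeScript project...",

"description": "Explore entity structure"

}

}

The tool use flow proceeds as follows:

1. The assistant requests a tool call with tool_use
2. The user turn returns the execution result with tool_result
3. The assistant replies with text or issues another tool_use

Which tools are available during this process is defined by the tools array.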
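One turn of this flow can be sketched on the client side as follows: scan the assistant content for tool_use blocks, run a local handler for each, and wrap the outputs in a user message of tool_result blocks. The names run_tools and handlers are hypothetical, and handlers are assumed to take the input dict and return a string.

```python
from typing import Callable, Dict, List, Optional


def run_tools(assistant_content: List[dict],
              handlers: Dict[str, Callable[[dict], str]]) -> Optional[dict]:
    """Answer every tool_use block; return None when there is none."""
    results = []
    for block in assistant_content:
        if block.get("type") != "tool_use":
            continue  # text and thinking blocks need no reply
        handler = handlers.get(block["name"])
        if handler is None:
            output = f"unknown tool: {block['name']}"
        else:
            output = handler(block["input"])
        results.append({
            "type": "tool_result",
            # tool_use_id ties the result back to the originating call.
            "tool_use_id": block["id"],
            "content": [{"type": "text", "text": output}],
        })
    if not results:
        return None  # plain text answer: the loop can stop here
    return {"role": "user", "content": results}
```

A caller would append the returned message to messages and send the request again, repeating until run_tools returns None.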

Tools is an array that defines the tools Claude can use. Each tool consists of three fields: name, description, and input_schema.

"tools": [

{

"name": "ToolName",

"description": "Tool에 대한 설명...",

"input_schema": {

"type": "object",

"properties": {...},

"required": [...],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

}

}

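]

| Field | Description |
|---|---|
| name | Unique identifier of the tool. Claude calls the tool by this name in tool_use |
| description | Detailed account of the tool's purpose, usage, and caveats. Claude relies on it to decide which tool to pick |
| input_schema | Definition of the input parameters in JSON Schema format |

input_schema follows the JSON Schema draft-07 spec and defines the parameters required when the tool is called.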

"input_schema": {

"type": "object",

"properties": {

"pattern": {

"type": "string",

"description": "The regular expression pattern to search for"

},

"path": {

"type": "string",

"description": "File or directory to search in. Defaults to current working directory."

},

"output_mode": {

"type": "string",

"enum": ["content", "files_with_matches", "count"],

"description": "Output mode: 'content' shows matching lines, 'files_with_matches' shows file paths..."

},

"-i": {

"type": "boolean",

"description": "Case insensitive search"

},

"head_limit": {

"type": "number",

"description": "Limit output to first N lines/entries"

}

},

"required": ["pattern"],

"additionalProperties": false,

"$schema": "http://json-schema.org/draft-07/schema#"

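}

Each parameter is defined with the following fields:

| Field | Description |
|---|---|
| type | Data type (string, number, boolean, array, object, etc.) |
| description | Purpose and usage of the parameter |
| enum | (Optional) List of allowed values; exactly one of them may be chosen |
| default | (Optional) Default value |

The schema object itself has these top-level fields:

| Field | Description |
|---|---|
| type | Always "object" |
| properties | Object holding the parameter definitions |
| required | Array of required parameter names. Parameters not listed here are optional |
| additionalProperties | When false, parameters not defined in the schema cannot be passed |
| $schema | Declares the JSON Schema version |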
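The field tables above translate fairly directly into a small builder. This is only a sketch: make_tool and DRAFT_07 are hypothetical names, and the fixed values (type "object", additionalProperties false, the draft-07 $schema URL) simply copy what the examples in this post show.

```python
DRAFT_07 = "http://json-schema.org/draft-07/schema#"


def make_tool(name, description, properties, required=()):
    """Build a tool definition with name, description and input_schema."""
    # Every required name has to be defined under properties.
    missing = [p for p in required if p not in properties]
    if missing:
        raise ValueError(f"required parameters not defined: {missing}")
    return {
        "name": name,
        "description": description,
        "input_schema": {
            "type": "object",               # always "object" at the top level
            "properties": properties,
            "required": list(required),
            "additionalProperties": False,  # reject undefined parameters
            "$schema": DRAFT_07,
        },
    }
```

Calling make_tool("Grep", "...", {"pattern": {"type": "string", "description": "..."}}, required=["pattern"]) produces a dict with the same shape as the Grep definition that follows.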

{

"name": "Grep",

"description": "A powerful search tool built on ripgrep\n\n Usage:\n - ALWAYS use Grep for search tasks...",

"input_schema": {

"type": "object",

"properties": {

"pattern": {

"type": "string",

"description": "The regular expression pattern to search for in file contents"

},

"path": {

"type": "string",